Big tech today makes a lot of money on ads. AI companies, despite the millions of users of ChatGPT or Perplexity, do not make any money from ads. Odd, right?

Are $20/mo subscriptions sustainable?

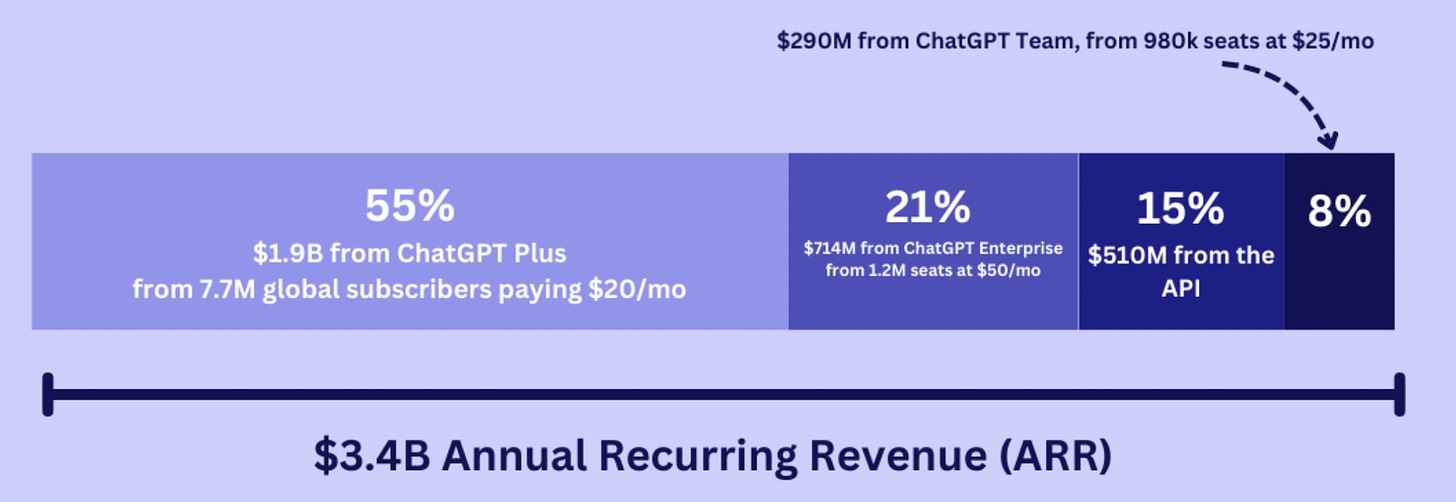

ChatGPT charges $20/mo, more than Amazon Prime, Netflix, or most other mass-adopted consumer subscriptions. It’s hard to argue with 7.7M global subscribers added in the last two years, but I doubt those same 7.7M consumers would pay an incremental $20/mo for Perplexity, Claude, or Character. At a certain point, the consumer only has so much disposable income to spend on AI products.

This feels like the streaming wars: an unprofitable race to the bottom.

If this is the reality, then the obvious question is: why hasn’t anyone incorporated ads yet? The answer: it’s really hard.

The challenge with ads and LLMs

First, there’s the question of what the ad looks like: a dynamic banner or link based on the query? A suggested search? Or an ad embedded into the LLM’s response?

Dynamic banners wouldn’t be meaningfully different from the way Google does advertising today. This feels like the most realistic starting place but doesn’t feel native to the chat-based format.

Suggested searches are a step closer to feeling LLM native. However, this feels a bit like advertising on page 2 of the Google results. Have you ever been to page 2?

Embedding the advertising into the LLM’s response feels most native to the platform but comes with a host of challenges.

The issues include:

Trust: AI chat bots are a new category. People are already skeptical of hallucinations and advertising could damage consumer trust in the output.

Control: LLM output is hard enough to steer as it is. I’m not certain we know how to safely inject ads into responses.

Latency: Serving ads can’t meaningfully degrade the chat bot’s latency.

Measurement and attribution: Attribution would likely have to look different than it does at Google, because the user is looking for an answer rather than a site to click through to.

Technical architecture: Modifying inference architecture to accommodate ad insertion is nontrivial.

Despite this, the payoff from ads feels too big to ignore.

The size of the prize

Sam Altman said at the end of last year that ChatGPT had 100M users. Let’s be conservative and hold that constant and subtract out the 7.7M paying users. That gives us ~92M free users to serve ads to. While Google doesn’t report average revenue per user (ARPU), Meta does and has a blended ARPU of $13.12 worldwide (note this is much higher in developed countries).

If ChatGPT could monetize users similarly, that would equate to $1.2B in additional revenue! If we use the $68 US and Canada ARPU, this figure is $6.25B. And even this number is minuscule in comparison to Google’s search ads business, which has a run-rate revenue of $185B.
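For anyone who wants to check the math, here is a rough sketch of the estimate in Python. It only uses the figures quoted above; the variable and function names are my own, and it takes the ARPU numbers at face value for whatever period they cover. Because it uses the unrounded 92.3M free users rather than ~92M, the results land slightly above the rounded figures in the text.

```python
# Back-of-the-envelope ad revenue estimate using the figures quoted in the post.
# All names here are illustrative; the inputs are the post's own numbers.

TOTAL_USERS = 100_000_000        # ChatGPT users, held constant
PAYING_USERS = 7_700_000         # subscribers, excluded from the ad audience
ARPU_BLENDED = 13.12             # Meta blended worldwide ARPU, in dollars
ARPU_US_CANADA = 68.00           # Meta US and Canada ARPU, in dollars
GOOGLE_SEARCH_RUN_RATE = 185e9   # Google search ads run-rate revenue, in dollars


def ad_revenue(free_users: int, arpu: float) -> float:
    """Revenue if every free user monetized at the given ARPU."""
    return free_users * arpu


free_users = TOTAL_USERS - PAYING_USERS
blended = ad_revenue(free_users, ARPU_BLENDED)
high = ad_revenue(free_users, ARPU_US_CANADA)

print(f"Free users:             {free_users / 1e6:.1f}M")
print(f"At blended ARPU:        ${blended / 1e9:.2f}B")
print(f"At US and Canada ARPU:  ${high / 1e9:.2f}B")
print(f"High case vs. Google:   {high / GOOGLE_SEARCH_RUN_RATE:.1%}")
```

Swapping in a different user count or ARPU is a one-line change, which makes it easy to see how sensitive the size of the prize is to either assumption.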

It’s worth noting that Netflix and Amazon (just Prime), as comps for a consumer subscription business, had run-rate revenues of about $37.5B and $41B, respectively, during the same period. Each is less than 25% of Google’s search business (although still considerably more than most companies could dream of). And, for what it’s worth, Netflix recently phased out its lowest ad-free subscription tier in favor of an ad-supported one.

Obviously, OpenAI and the other model providers are in neither the content business nor the retail business, but it’s an interesting lens on the revenue ceiling of consumer subscription businesses.

Perplexity: The first mover

I’m not the only one who sees the writing on the wall for subscription-based AI chat bots. Perplexity seems to be the first mover: they recently announced they’re exploring ads in the “Related” section underneath each response.

According to Perplexity, 40% of their queries come from this section. This will be an interesting test of advertiser interest and consumer reactions to this form of advertising.

If it works, don’t be surprised if other model providers follow fast. The potential benefits are too large to ignore.