Building breakthroughs

New discovery requires new methods. Those methods, regardless of where they're derived, move human knowledge forward.

In my last post, I discussed how AI can help us learn. That post focused on raising the floor for knowledge — how can we use technology to improve outcomes for everyone.

This post will cover how we can raise the ceiling — how can we use technology to push our understanding at the bleeding edges of a given field.

Setting the scene: The knowledge plateau

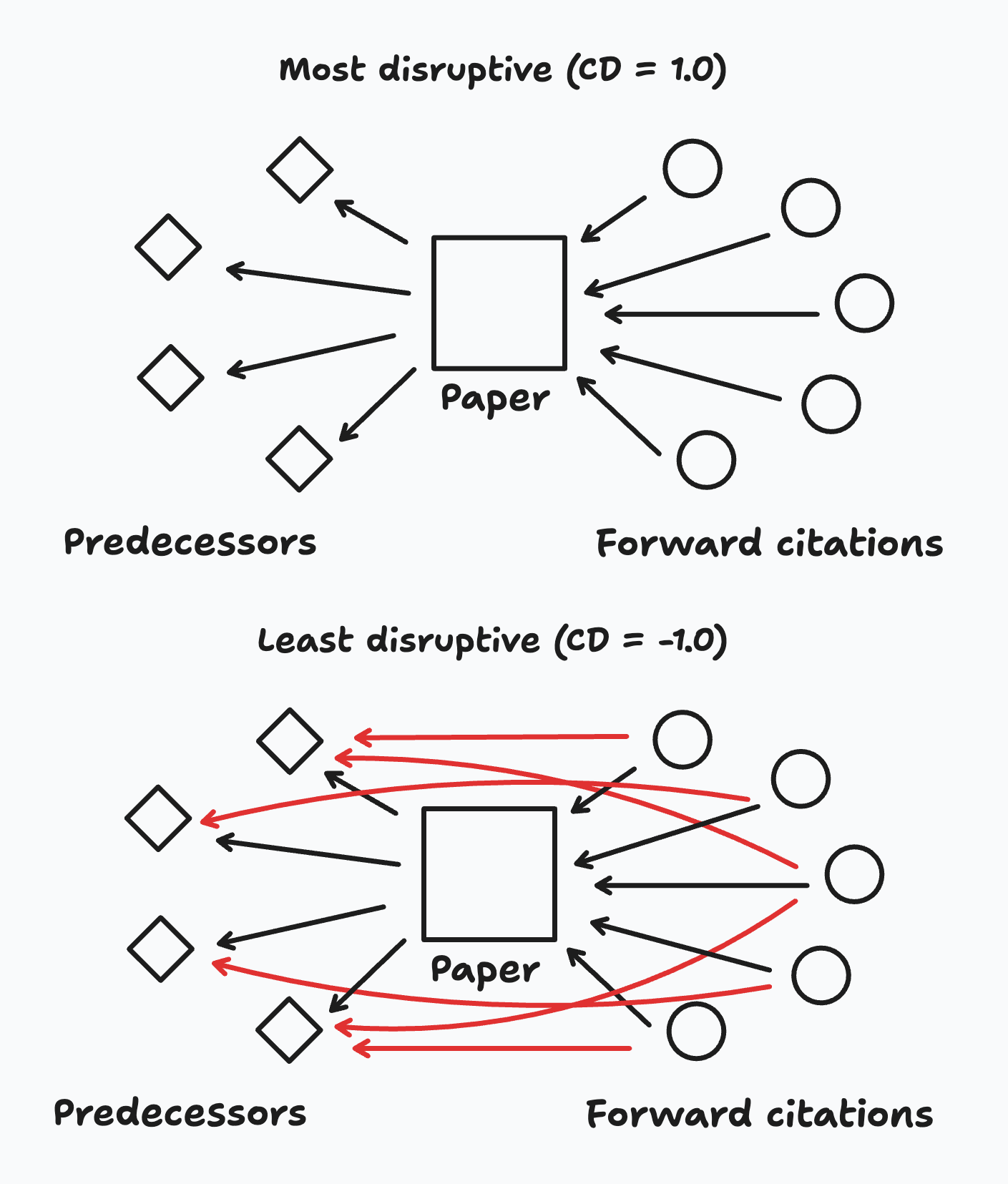

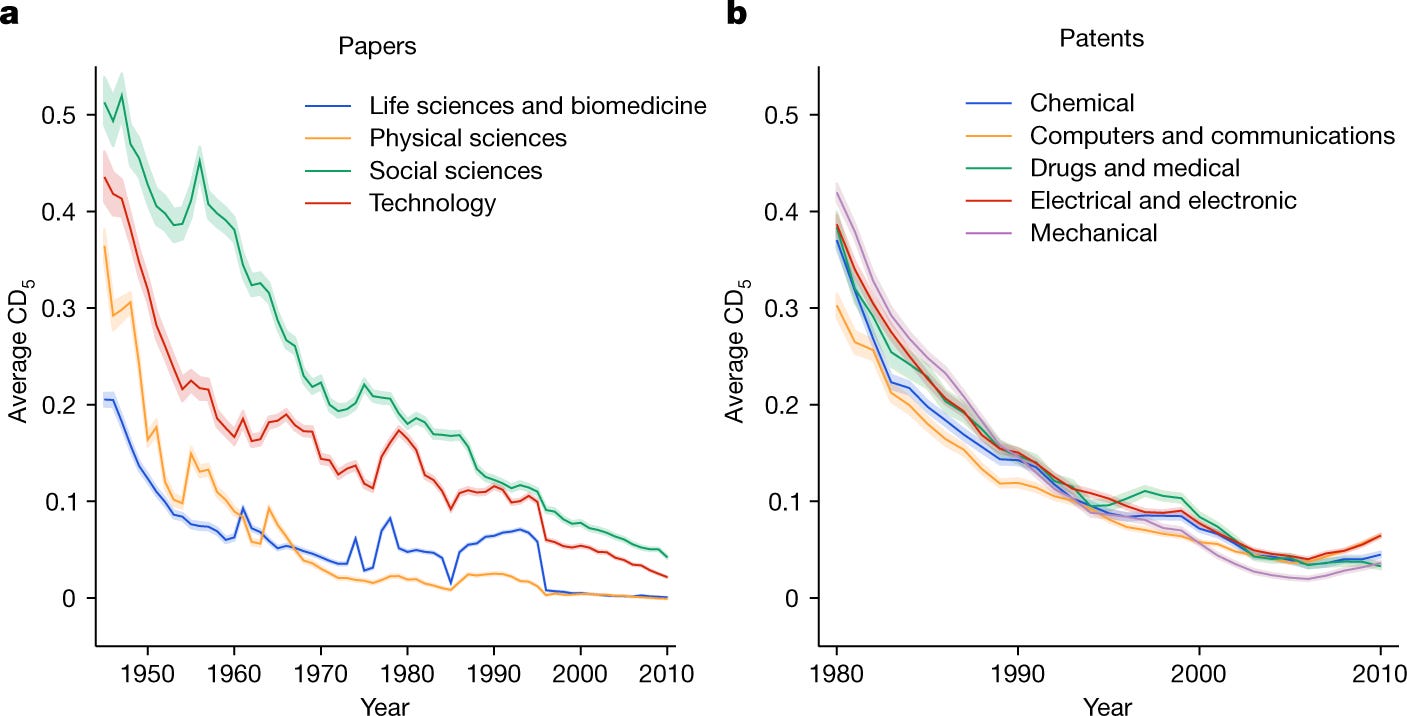

A paper published in January 2023 in Nature found that scientific discoveries have become less disruptive over time. In other words, we seem to have hit a plateau in new knowledge.

This plateau is measured through the CD Index, which captures how often future papers that cite a given paper also cite its predecessors. You can think about it like this: if a paper is disruptive, future papers will stop citing prior discoveries because they’ll now be out of date.

Below, you can see what this might look like at the extremes. A paper with CD = 1 will have all forward citations referencing just itself and not its predecessors. A minimally disruptive paper (CD = -1) would have all forward citations referencing both it and its predecessors.
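To make the metric concrete, here is a minimal Python sketch of one common formulation of the CD Index. Each later paper scores +1 if it cites only the focal paper, -1 if it cites the focal paper and at least one predecessor, and 0 if it cites only predecessors; the index is the average of those scores. The function name `cd_index`, the paper IDs, and the toy citation data are all hypothetical, and the published index is computed over a fixed time window that this sketch ignores.

```python
def cd_index(focal, predecessors, forward_refs):
    """Average disruption score over later papers.

    focal        -- ID of the paper being scored (hypothetical ID scheme)
    predecessors -- IDs of the papers the focal paper cites
    forward_refs -- dict mapping each later paper to the set of IDs it cites
    """
    predecessors = set(predecessors)
    total = 0
    counted = 0
    for refs in forward_refs.values():
        refs = set(refs)
        f = int(focal in refs)             # cites the focal paper?
        b = int(bool(refs & predecessors)) # cites any predecessor?
        if not (f or b):
            continue  # unrelated paper, not part of the citation network
        counted += 1
        total += -2 * f * b + f  # +1 focal only, -1 both, 0 predecessors only
    if counted == 0:
        raise ValueError("no later paper cites the focal paper or its predecessors")
    return total / counted


if __name__ == "__main__":
    preds = {"p1", "p2"}
    # Every later paper cites only the focal paper: maximally disruptive.
    disruptive = {"a": {"focal"}, "b": {"focal"}}
    # Every later paper cites the focal paper and a predecessor.
    consolidating = {"a": {"focal", "p1"}, "b": {"focal", "p2"}}
    print(cd_index("focal", preds, disruptive))
    print(cd_index("focal", preds, consolidating))
```

The two toy networks correspond to the extremes described above; real papers fall somewhere in between.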

For decades, we’ve seen a decline in average CD across disciplines, in both papers and patents.

There are a number of potential explanations, including an increasing burden of knowledge[1] and the possibility that we’ve simply picked all the low-hanging fruit.

The effect is clear, regardless of the cause, and the implications are frightening.

How to beat the plateau

Novel discoveries often require novel methods.

As in investing, you may generate some returns by following the momentum of what’s working, but the outsized returns come from non-consensus or overlooked ideas.

The methods, strategies, and products that drive step changes in frontier knowledge are similar. There’s only so much juice to squeeze from optimization.

AlphaGo: A case study on beating the plateau

Many people have now heard of AlphaGo’s 2016 match against Lee Sedol, one of the world’s top Go players. But few appreciate its lasting significance.

Background

Go is a notoriously hard challenge for AI.

With roughly 10^170 possible board configurations, Go has more positions than there are atoms in the known universe. That is far too many to simulate every sequence of moves and choose the best path. Instead, you need to learn to play the game.

Due to these challenges, Sedol boasted before the match that he’d “win in a landslide.”[2]

The match was a best-of-five series. AlphaGo won the first game, which was impressive, but nothing truly remarkable had happened yet.

The magic happened in Game Two.

This is where AlphaGo played the famous “move 37.” Initially, the move looked like a mistake: it was one that a human would never think to make.

Yet, it turned out to be a brilliant strategic choice.

If you have four minutes, watch this video. Sedol is shaken to his core by the move. He takes more than 12 minutes to place his next stone instead of his usual two minutes or less.

Sedol said afterwards:[3]

“I thought AlphaGo was based on probability calculation and that it was merely a machine. But when I saw this move, I changed my mind. Surely, AlphaGo is creative.”

The significance

The common narrative of the aftermath of AlphaGo v. Lee Sedol is that this loss was so devastating that it caused Sedol’s ultimate retirement. It is true that when he retired, he said “Even if I become the number one, there is an entity that cannot be defeated.”[4]

However, it wasn’t until a recent interview on the Training Data podcast that I learned of another long-lasting effect…

…AlphaGo made human players better.

Jim Gao, co-founder & CEO of Phaidra and formerly of DeepMind, said:

“Lee Sedol was the best in his field at Go. He was the world champion for a decade. He was at the top, and his Elo rating was just something outrageous. It was like 2800 or something. It was outrageously high, but it had flatlined, right? So for a full decade, his Elo rating was the same, and there was no one to challenge him because he was already at the top. So once he hit the top, he just kind of plateaued.

And then after AlphaGo happened—and he actually got to play against AlphaGo privately a few more times because DeepMind had let him continue interacting with the system—what happened? For the first time in a decade, his Elo rating started climbing.”

Candidly, despite how good a quote this is, I couldn’t verify that Sedol himself actually got better in the aftermath. BUT this idea did send me down a rabbit hole and, as it turns out, the essence of the statement still holds true: Go players got better after AlphaGo.

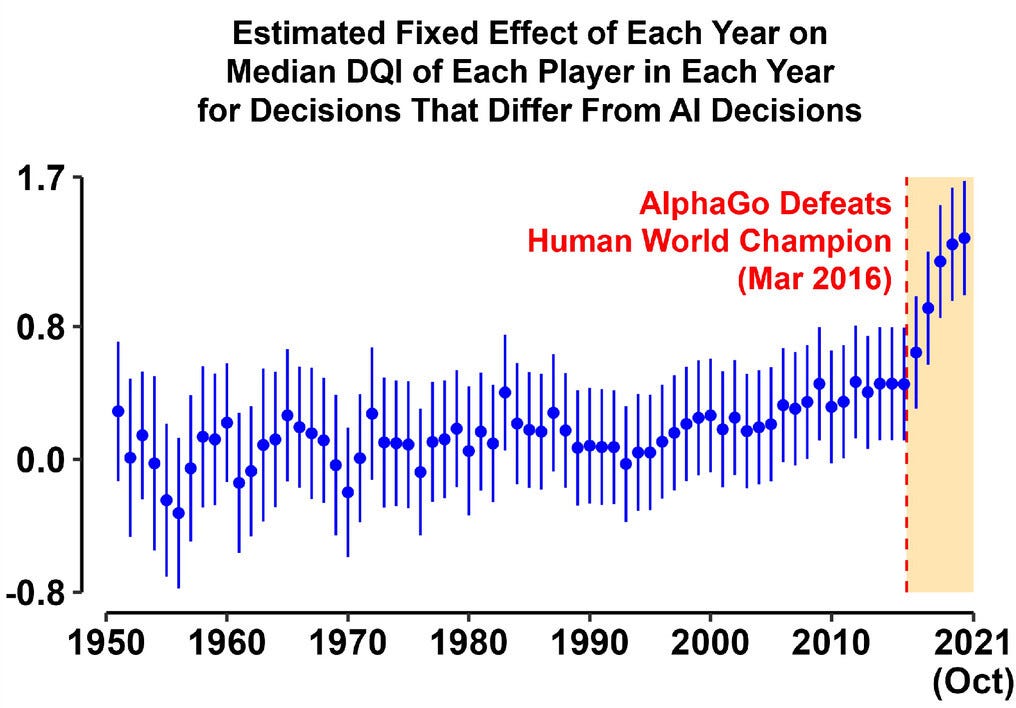

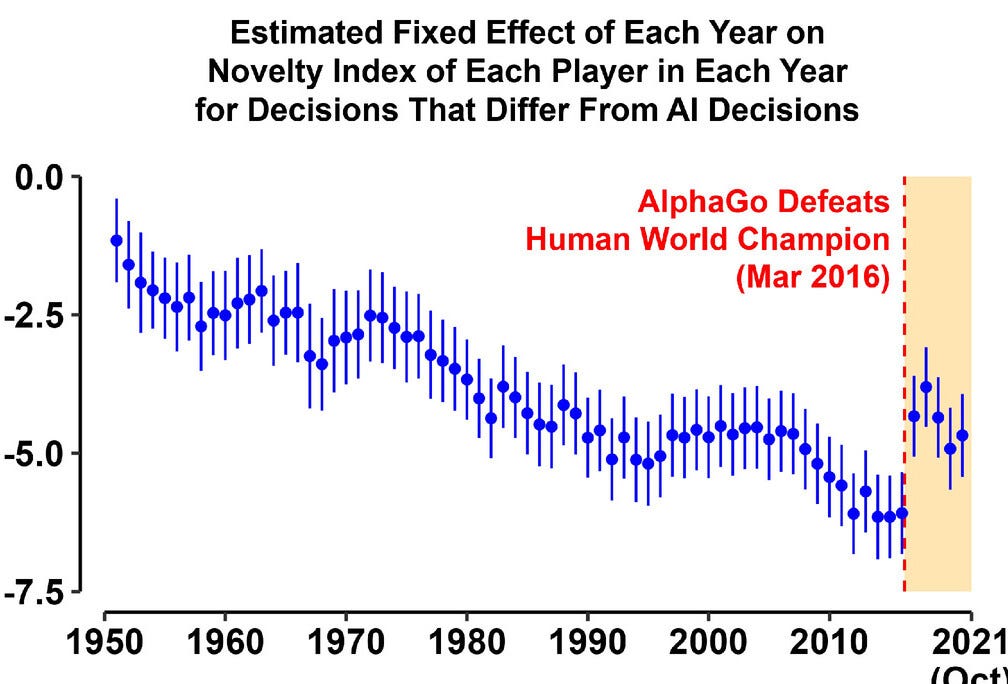

In 2023, researchers analyzed 5.8 million decisions by professional Go players from 1950 to 2021 and used an AI model to grade the quality of each decision.

As you can see, decision quality was stagnant for 60 years before AlphaGo beat Sedol in 2016. Soon after, decision quality increased dramatically…

And, in addition to decision quality, the novelty of human decisions also rose sharply...

This discovery shows that AI breakthroughs can not only advance a field directly but also help humans overcome existing (and often long-lasting) performance plateaus.

Beyond board games

As great as it is that we’ve improved human performance in Go, there’s ultimately not a lot of economic value created from being better at board games.

Luckily, there are a few other examples from industry that have had similar levels of impact and can show us what else might be around the corner.

Fraud: Stripe

In a recent interview with Logan Bartlett, Will Gaybrick of Stripe described a new transformer model for fraud:

“Just [taking] a giant JSON blob of payments data…stringifying it, turning it into pure text, tokenizing it…and feeding it into a transformer model has led to the ability to [identify fraud] in a way that is completely unintuitive to a human.”

This “unintuitive” approach has led to fantastic results...

“We just take a new payment coming in. Maybe it’s just one of three card testing payments on a site, there’s no spike for us to detect, and, you know, this model will be able to tell us in a way that no hand-curated features ever could that ‘hey, just so you know that one random transaction that doesn’t look suspicious to you is actually very likely part of what is one of these distributed attacks.’”

Given Stripe’s position in the market, the intuition of Stripes is likely in the top 1% in fraud detection. Yet, unbound by human-derived features, Stripe was able to meaningfully improve its own fraud detection capabilities.

This out-of-the-box thinking moves the whole field forward.
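As a rough illustration of the serialization step Gaybrick describes (stringify a JSON payment record, then tokenize the text), here is a toy Python sketch. It is not Stripe’s pipeline: the field names, the regex tokenizer, and the `encode` vocabulary helper are assumptions made for illustration, and a production system would more likely use a learned subword tokenizer before feeding token IDs to a transformer.

```python
import json
import re


def stringify_payment(payment: dict) -> str:
    """Serialize a payment record to compact, deterministic JSON text."""
    return json.dumps(payment, sort_keys=True, separators=(",", ":"))


def tokenize(text: str) -> list[str]:
    """Toy tokenizer: runs of word characters, or single punctuation marks."""
    return re.findall(r"[A-Za-z0-9_.]+|[^A-Za-z0-9_.\s]", text)


def encode(tokens: list[str], vocab: dict) -> list[int]:
    """Map tokens to integer IDs, growing the vocabulary as new tokens appear."""
    return [vocab.setdefault(tok, len(vocab)) for tok in tokens]


if __name__ == "__main__":
    # Hypothetical payment fields, chosen only for illustration.
    payment = {"currency": "usd", "amount": 100, "card_country": "US"}
    text = stringify_payment(payment)
    tokens = tokenize(text)
    ids = encode(tokens, {})
    print(text)
    print(tokens)
    print(ids)  # a sequence like this is what a sequence model would consume
```

The point of the sketch is that no hand-curated features are computed: the raw record becomes a token sequence, and any patterns are left for the model to find.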

Datacenters: Phaidra

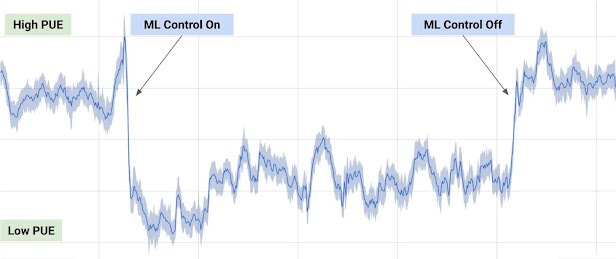

In 2016, Google announced that it had used reinforcement learning to reduce the energy used for cooling its datacenters by up to 40%. This is a massive power savings, especially when you consider that Google’s datacenters were already state of the art.

Below is a chart of Power Usage Effectiveness that Google shared associated with the breakthrough:

Recounting this event, Jim Gao (introduced above) describes “AI creativity” in the system in much the same way AlphaGo was viewed:

“[AI creativity is] the ability to acquire knowledge that did not exist before. And I, of course, experienced this firsthand. The reason why I’m such a true believer in the technology is because I was the expert who helped design the system, but this very AI agent that we created is telling me new things about the system that I didn’t know about before. And that’s a very, very powerful feeling.”

As with Go, it’s not just the results but the methods that matter. These methods advance our own understanding of the underlying systems.

Spotting the next breakthrough

If we take the examples above to be representative, then there are two places to go looking for breakthrough opportunities: complex systems and needle-in-a-haystack problems.

Complex systems

Revisiting the plateau in science discussed at the top of this post, the hard sciences present a huge opportunity for AI-driven breakthroughs. These are clearly complex systems with massive payoffs to new knowledge. A new material or new protein could create hundreds of billions of dollars of economic value.

Other complex systems, such as manufacturing processes, logistics networks, or chip design, are also exciting places to seek breakthrough opportunities.

Needle-in-a-haystack

Stripe’s card testing detection is a great example of finding needles in a haystack. As in Go, AI models are not bound to the traditional patterns that humans have been trained to spot. Instead, these models can find patterns that humans just see as noise.

Examples here include fraud detection, cybersecurity, weather modeling, mining, genomics and more.

Closing thoughts: Hard problems

Moving a whole field forward is a lofty goal. However, counterintuitively, these big ideas are often not that much harder to tackle than smaller ones. In some cases, they can be easier.

Since everything discussed in this post would be classified as a hard problem, I’ll end with this quote by Sam Altman:

“The most counterintuitive secret about startups is that it’s often easier to succeed with a hard startup than an easy one.

“Let yourself become more ambitious—figure out the most interesting version of where what you’re working on could go. Then talk about that big vision and work relentlessly towards it, but always have a reasonable next step. You don’t want step one to be incorporating the company and step two to be going to Mars.”

Think big but start small.

[1] The Burden of Knowledge implies that the collective knowledge of every field is now so great that innovators must learn more than ever to reach the frontier. This consumes more of their career and leaves less time for novel discovery.

[2] https://www.newscientist.com/article/2079871-im-in-shock-how-an-ai-beat-the-worlds-best-human-at-go/

[3] https://deepmind.google/research/breakthroughs/alphago/

[4] https://www.theverge.com/2019/11/27/20985260/ai-go-alphago-lee-se-dol-retired-deepmind-defeat
